Are the best practice guidelines for effective prompt writing complete, or are they missing something? Do prompt generators really get it right? Can Large Language Models (LLMs) such as ChatGPT, or prompt templates, create effective prompts, or is more needed? This article addresses these questions and argues that effective prompt engineering needs a greater focus on brevity.

Detail is necessary but makes prompts lengthy

While best practice guidelines usually advise using specific, detailed and precise prompts to improve the quality of outputs, little is said about optimising the length of a prompt. For example, Harvard University’s student guidelines suggest that, to refine the AI’s output, we should structure prompts with clear guidelines such as the desired genre, target audience, desired tone and “act as if” roles. No mention is made of brevity. Further, I have seen corporate prompt templates that provide highly detailed guidelines and encourage full-sentence inputs. It seems we are being allowed, or even encouraged, to be unnecessarily verbose in our prompts.

Being polite adds unnecessary words

Sam Altman, CEO of OpenAI, recently remarked that users’ “please” and “thank you” messages cost OpenAI millions of dollars in processing power, which also adds to environmental impact. Like many people, I used to add “please” to every ChatGPT request out of politeness. If even courtesies carry a cost, that is a good reason to keep prompts concise.

But why does a longer prompt cost more?

Tokens have a cost

A token is the basic unit of text an LLM processes. A token may be a whole word, part of a word, a single character, a punctuation mark, a space or a special symbol. The longer the prompt, the more tokens it uses, the sooner any daily token allowance runs out and, for users billed per token, the higher the bill.

Different LLMs split text into tokens in different ways, so without back-end knowledge it is difficult to know exactly how many tokens a given prompt uses. As a rule of thumb, however, ChatGPT (OpenAI), Perplexity.ai and Gemini (Google) average roughly four characters per token for English text. Full stops (periods) and spaces may be counted as separate tokens.

So we need to minimise the tokens in our prompts, compressing our message to reduce processing demands and costs.
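As a rough illustration of why every word counts, the Python sketch below applies the four-characters-per-token rule of thumb to a polite and a plain version of the same request, then scales the difference up. The functions `estimate_tokens` and `estimated_cost`, and the $2.50-per-million-token price, are hypothetical assumptions for illustration, not any provider's published tokeniser or rate.

```python
import math

# Rule-of-thumb estimate only: ~4 characters of English text per token.
# Real tokenisers differ by model, so treat the result as a ballpark figure.
CHARS_PER_TOKEN = 4.0


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Estimate tokens by dividing the character count by chars_per_token, rounding up."""
    return math.ceil(len(text) / chars_per_token)


def estimated_cost(tokens: int, requests: int, usd_per_million_tokens: float) -> float:
    """Input cost of sending `tokens` tokens `requests` times at an ASSUMED price."""
    return tokens * requests * usd_per_million_tokens / 1_000_000


polite = ("Please could you create a PowerPoint of 10-15 slides on "
          "Prompt Engineering Best Practices? Thank you!")
plain = "Create a PowerPoint of 10-15 slides on Prompt Engineering Best Practices."

saved = estimate_tokens(polite) - estimate_tokens(plain)

# HYPOTHETICAL figures for illustration: one million requests at $2.50 per million input tokens.
print(f"Estimated tokens saved per request: {saved}")
print(f"Estimated saving per million requests: ${estimated_cost(saved, 1_000_000, 2.50):.2f}")
```

A saving of a few tokens per request looks trivial, but cost grows linearly with request volume. For exact counts, we would use the model's own tokeniser (for OpenAI models, the open-source `tiktoken` library) rather than this heuristic.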

Do Prompt Generators get it right?

Prompt Generators are tools specifically designed to enhance prompt clarity by turning vague ideas into more precise instructions. As an example, try the free prompt generator by originality.ai (https://originality.ai/blog/ai-prompt-generator).

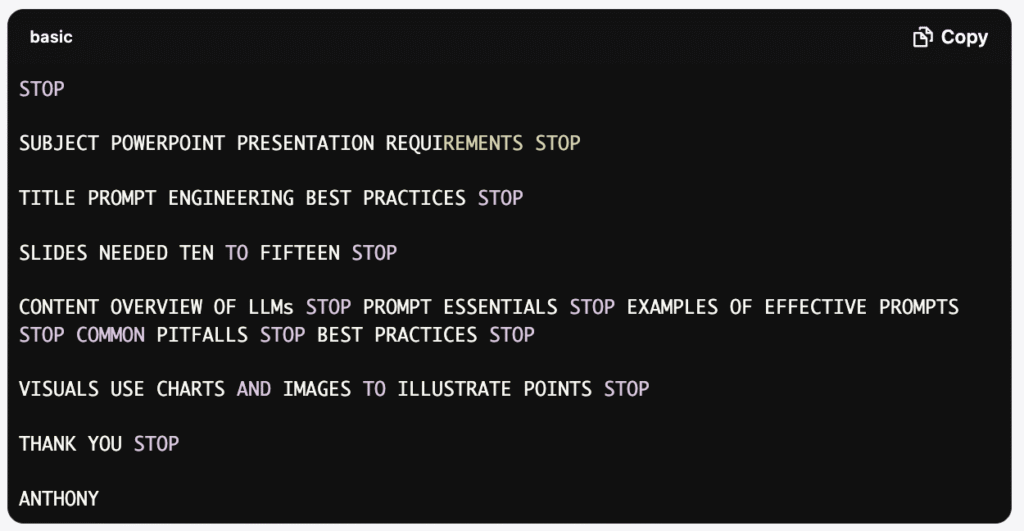

Using this tool, the simple prompt: “Create a PowerPoint of 10-15 slides on Prompt Engineering Best Practices” generated a 20-line ‘enhanced’ prompt, part of which is as follows:

**Prompt for AI Presentation Generator:**Create a PowerPoint presentation consisting of 10-15 slides focused on “Prompt Engineering Best Practices.”The slides should include the following elements: [details omitted]Ensure that each slide is visually engaging with appropriate images, graphs, or charts, and that the text is concise and clear to facilitate audience understanding.”

Note that this prompt cuts the unnecessary spaces between sentences. Yet far greater brevity could be achieved by cutting unnecessary words without changing the underlying message.

Is ChatGPT any better?

Similarly, when instructed to “Act as if you are a ‘best-in-class’ prompt generator. Give an enhanced prompt for the following: ‘Create a PowerPoint of 10-15 slides on Prompt Engineering Best Practices’”, ChatGPT produced a detailed, 235-word prompt, including the following:

- “Design Style: Use a modern, clean, and professional template. Include relevant icons, diagrams, and screenshots. Prefer dark-on-light text, consistent fonts, and subtle animations.

- Tone: Educational, authoritative, but accessible.”

Again, quite wordy.

If shorter prompts save costs, it would seem that Prompt Generators and GPTs need to be trained to be more concise. After all, as George Orwell wrote among his rules for effective writing in his 1946 essay “Politics and the English Language”, “If it is possible to cut a word out, always cut it out.”

Remember Telegrams?

In the early to mid-1900s, messages were often sent by telegram and delivered to the door by a postman. Telegrams were expensive to transmit and were charged by the word, so short, standard formats were used to minimise costs. Our prompt in telegram form might have read:

SUBJECT POWERPOINT PRESENTATION STOP TITLE PROMPT ENGINEERING BEST PRACTICES STOP SLIDES TEN TO FIFTEEN STOP CONTENT OVERVIEW OF LLM STOP PROMPT ESSENTIALS STOP COMMON PITFALLS STOP BEST PRACTICES STOP USE CHARTS AND IMAGES TO ILLUSTRATE STOP

The style was clear, direct and precise. Most importantly, telegrams were as concise as possible and written in a way that the receiver could infer, interpret and easily understand the message.

So, can telegram-style brevity be used to create better prompts? Can we even cut out the spaces between words without losing the integrity of the prompt? Can ChatGPT or an AI agent interpret highly compressed prompts such as:

CreatePPT, 10-15slides, charts/images. Title:“PromptEngineeringBestPractices”. Content: LLMOverview, PromptEssentials, EffectivePromptExamples, BestPractices, Summary.

LLMs can infer what is missing

It turns out the answer is yes! When asked, ChatGPT reported that LLMs can understand this style of prompt because “the LLM receives all necessary information, presented in a way it can understand and able to infer what is missing”. To test this, I entered the above prompt into an AI agent PowerPoint builder and it worked well (a link to the output is given at the end of this article).

Further compression of our prompt may be possible by removing all spaces or punctuation. However, this risks making the prompt ambiguous or harder for the LLM to parse, which can reduce output quality or cause misinterpretation. It may not even save many tokens, because tokenisers typically attach a space to the word that follows it.

Revising Prompt Best Practices

Our new best practices for prompt engineering will need to cover how to write prompts that are not only clear, precise, detailed and unambiguous, but also cut out every word we possibly can.

Here are some suggested additional guidelines:

- Ensure every word is essential; avoid redundancy.

- List items with commas or semicolons instead of full sentences.

- Use standard abbreviations (e.g. PPT); the LLM can understand them.

- Remove all filler words.
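The last guideline can be partly automated. The Python sketch below strips a few common polite and filler phrases from a prompt, then tidies the leftover spacing and punctuation. The `strip_filler` function and its `FILLER_PATTERNS` list are hypothetical and deliberately small; a blind phrase list can also remove words that matter, so the result should still be checked by eye.

```python
import re

# Hypothetical, deliberately short list of polite/filler phrases (regex patterns).
FILLER_PATTERNS = [
    r"\bplease\b",
    r"\bkindly\b",
    r"\bcould you\b",
    r"\bthank you\b",
    r"\bthanks\b",
]


def strip_filler(prompt: str) -> str:
    """Remove the listed filler phrases, then tidy spacing and punctuation."""
    text = prompt
    for pattern in FILLER_PATTERNS:
        # Case-insensitive, so "Please" and "please" are both removed
        text = re.sub(pattern, "", text, flags=re.IGNORECASE)
    # Collapse the runs of whitespace left behind by the removals
    text = re.sub(r"\s+", " ", text)
    # Remove any space left before punctuation, e.g. "slides. !" -> "slides.!"
    text = re.sub(r"\s+([,.;:!?])", r"\1", text)
    # Trim leftover spaces and punctuation from both ends (telegram style needs no final full stop)
    text = text.strip(" ,.;:!?")
    # Re-capitalise the first letter, which may have followed a removed word
    return text[:1].upper() + text[1:]


if __name__ == "__main__":
    print(strip_filler("Please could you create a PowerPoint of 10-15 slides. Thank you!"))
```

Here the polite request is reduced to `Create a PowerPoint of 10-15 slides`. A regex list keeps the sketch transparent; rephrasing prompts more aggressively would need human judgement or the LLM itself.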

In conclusion, it is time to reconsider our prompting best practices, review our AI agent prompt templates, and even retrain our AI-based prompt-generating tools to write prompts with both clarity and brevity in mind, so that they use fewer tokens.

In our daily prompt writing, we can aim to reduce the number of words we use. Prompt generators and GPTs can be trained to recommend prompts in an even more abbreviated style.

Effective prompts are not only clear, specific and precise, but also concise. Brevity minimises the number of tokens used, lowers costs and reduces environmental impact.

——

For the PowerPoint presentation created from the short prompt, see https://www.hrsolutions.com.hk/wp-content/uploads/2025/07/PPT-from-Prompt-by-genspark.ai-example.pdf.